BlueSpace Takes On a Bendy Bus

“This incident was unique,” says Cruise.

Was it though? Not for us.

Source: Forbes

“Fender benders like this rarely happen to our [autonomous vehicles], but this incident was unique” - founder and CEO Kyle Vogt (source: Cruise blog)

We never imagined that the back of a bus could be “unique.” What was so unusual about it that a seasoned AV company like Cruise, with millions of miles driven in SF over many years, couldn’t handle it?

On March 23rd, a Cruise robo-taxi rear-ended a San Francisco Muni bus, triggering a recall of its fleet of 300 robo-taxis filed with NHTSA (National Highway Traffic Safety Administration). In recent news, a local AV expert noted, “The bus has an unusual shape because it is two sections, so the movement was not something it had in its scenarios…[they are] still training the software on how to react to different scenarios.” (source: KRON4)

When Reality Falls Outside of the AV’s Training Data Set…

What happens when a self-driving car sees something it’s never seen before? It can fail. When it does, the consequences range from fender benders and blocked traffic to more severe accidents, some of which have unfortunately been fatal, as the Uber ATG crash in Arizona showed.

Articulated buses are a common sight where robo-taxis operate in San Francisco, and, as Cruise reported to NHTSA, its software already had specific assumptions about them built in. Even so, Cruise couldn’t account for every corner case (see the detailed analysis below). Thankfully, no one was injured. This time.

According to Cruise’s root cause analysis, “the AV reacted based on the predicted actions of the front end of the bus, rather than the actual actions of the rear section of the bus.” (source: Cruise blog)

Incident Redux - BlueSpace.ai Version

Could this have played out differently? At BlueSpace, we handle the long tail well because the motion models in our 4D Predictive Perception work independently of what the object is. So we took our test vehicle to the site of the crash on Haight Street in San Francisco.

Not only was this the first time the BlueSpace system saw an articulated bus, it was also our first time on the streets of San Francisco. Thanks to our motion-first perception approach, our software works immediately in a new location, whether in bustling Las Vegas, dense Tokyo, or off-road in Nevada.

No fine-tuning specific to articulated buses or to San Francisco driving has been performed. Nothing in the BlueSpace software depends on knowing what an “articulated vehicle” is, or even what a “bus” is. Our approach does not depend on classification or its associated assumptions. We segment and solve for motion without getting bogged down in identifying what an object is or relying on built-in assumptions that could lead to incorrect predictions.

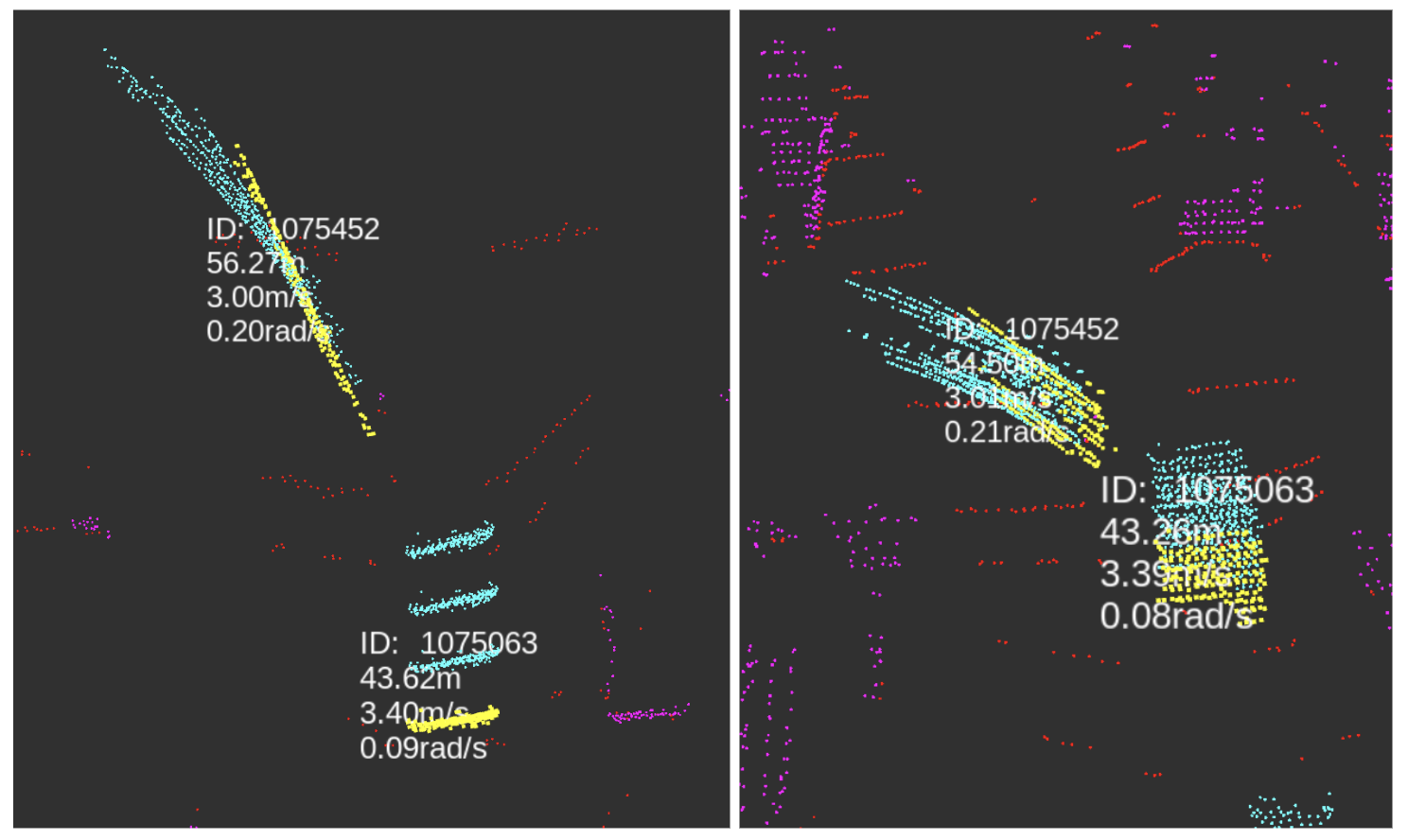

Here’s an articulated or “bendy” bus making a left turn.

Here’s how our software sees the bus during that turn. Note the two distinct motions detected by our system - in yellow and blue.

Notice that in both views, the front and rear sections of the bus are tracked separately even though they belong to one vehicle. Bendy buses, like tractor-trailers, have two distinct bodies, which means the two pieces sometimes move differently. Our motion-first 4D Predictive Perception correctly detects these different motions, enabling accurate prediction and motion planning.

Here is a more subtle example, where the bendy bus is maneuvering around a parked vehicle. Again, the motions of both parts are tracked separately, even when they are similar.

Of course, when the bus is traveling straight or is in the middle of a turn, the two halves follow the same trajectory, as you can see in the image and our software video below. In these cases, the bus can be tracked as one object.
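To make “tracking by motion rather than by class” concrete, here is a toy sketch. It is not BlueSpace’s 4D Predictive Perception algorithm, which this post does not describe; it is a generic stand-in that groups points only when they are close together and share a velocity, with no object label anywhere. The point format `(x, y, vx, vy)`, the function names `segment_by_motion` and `bendy_bus`, and both thresholds are hypothetical, and each bus section is simplified to pure translation (no rotation).

```python
import math
from typing import Dict, List, Tuple

# Hypothetical input: (x, y, vx, vy) in metres and metres/second,
# e.g. sensor returns with per-point velocity estimates. No class label is used.
Point = Tuple[float, float, float, float]


def segment_by_motion(points: List[Point], vel_tol: float = 0.5,
                      dist_tol: float = 1.5) -> List[List[int]]:
    """Group point indices that are spatially close AND move alike.

    Union-find over point pairs: two points join the same segment when they are
    within dist_tol metres of each other and their velocity vectors differ by at
    most vel_tol m/s. Both thresholds are illustrative assumptions.
    """
    parent = list(range(len(points)))

    def find(i: int) -> int:
        # Walk to the root, compressing the path as we go.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    for i in range(len(points)):
        xi, yi, vxi, vyi = points[i]
        for j in range(i + 1, len(points)):
            xj, yj, vxj, vyj = points[j]
            close = math.hypot(xi - xj, yi - yj) <= dist_tol
            same_motion = math.hypot(vxi - vxj, vyi - vyj) <= vel_tol
            if close and same_motion:
                parent[find(i)] = find(j)

    groups: Dict[int, List[int]] = {}
    for i in range(len(points)):
        groups.setdefault(find(i), []).append(i)
    return list(groups.values())


def bendy_bus(front_vel: Tuple[float, float],
              rear_vel: Tuple[float, float]) -> List[Point]:
    """Hypothetical two-section bus: points 1 m apart along x, joint near x = 0."""
    front = [(float(x), 0.0, *front_vel) for x in range(0, 7)]
    rear = [(float(-x), 0.0, *rear_vel) for x in range(1, 7)]
    return front + rear


turning = bendy_bus((2.0, 1.0), (3.0, 0.0))   # sections moving differently
straight = bendy_bus((3.0, 0.0), (3.0, 0.0))  # sections moving alike
print(len(segment_by_motion(turning)))   # → 2
print(len(segment_by_motion(straight)))  # → 1
```

When the two sections move differently, the points on either side of the joint are adjacent but disagree on velocity, so they land in separate segments; when both halves move alike, the same code merges them into one. This mirrors the one-object case described above without the code ever needing to know it is looking at a bus.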

See it all in one go:

Getting It Right: First vs. N-th time

Once again, this was the first time the BlueSpace system encountered an articulated bus. There were no built-in assumptions about, and no training on, how an articulated bus could, would, or should move. Our system is a real-time optimization engine that detects motion as accurately as possible.

Despite years of training in the city and many miles driven, Cruise’s AV system wasn’t ready for this. That raises the questions: how can we make autonomy systems more robust? How can we explain and scale these systems so that safety generalizes from a first-principles approach?

BlueSpace presents the solution. We do not wait to learn reactively from a crash before addressing these corner cases. BlueSpace 4D Predictive Perception’s zero-shot test with articulated buses highlights how a different approach to perception can address a whole class of failure modes before they happen. Elon was wrong: lidar is not a fool’s errand. What is “expensive” and “unnecessary” is chasing one edge case after another. Others may call a case “rare” or “unique” and blame special circumstances for the failure; to BlueSpace, it’s all the same. It comes down to getting to accurate motion consistently, reliably, and generically.

Let’s work together to make automation and autonomy safer for all.

#GetItRight #FirstTime #MotionAccuracyForSafety